Preparing Admissions Employees to Counsel Prospective Students

SCENARIO-BASED ILT/VILT

Overview

Audience

Admissions Department employees, including counselors, senior counselors, and department leadership, at a small, private, urban university

Responsibilities

Instructional design, collaboration with subject matter expert (SME), course design and development, facilitation, evaluation

Tools Used

Canva, Adobe Stock, Zoom, and Microsoft applications including Word, PowerPoint, Forms, and Teams

Problem & Solution

The Admissions Department reached out to request that our division, Digital Technology Services (DTS), participate in their annual slate of trainings, to prepare them to counsel prospective students on our university’s services.

In spite of explicitly requesting training, they seemed to expect an informational presentation (and indeed, in past years, that was how it had been delivered). They further specified their preferred modality, based on their team’s limited availability during this busy period: a 45-minute instructor-led training.

Upon consideration, I decided to design a more active skills-based learning experience. The objective was for learners to accurately and appropriately describe key functions of DTS to prospective students, just as they would need to do in the course of their job.

In the instructor-led training, employees would encounter several prospective student personas, each of whom would have different technology needs—reflecting the various needs their actual prospective students might have.

Then, using a one-page reference sheet of the most impactful DTS services and resources, learners would decide what information they would share with each student to best meet their needs.

Image description: Excerpt from an email that reads, “Admissions training ask: On behalf of the Admissions Office, I would like to invite you to take part in one of our summer trainings. We hold training sessions with different departments throughout the university, which helps us relay information to prospective students and families. [In bold] We would like to hear the highlights of your office, as well as what you think prospective students and families should know about the resources you have to offer. This is your best opportunity to share the most up-to-date information about your department with Admissions staff before we begin recruitment in the fall.”

Process

Kickoff Call

I used my kickoff call with the SME in my department to home in on the most important information about department services and resources for Admissions staff and prospective students.

We began by creating a comprehensive list of all possible information about DTS to share, which confirmed for both me and my SME that there was more than could reasonably be conveyed and assessed in a 45-minute training.

Image description: An excerpt of our kickoff meeting notes, identifying a comprehensive list of all possible department resources for students. This list includes sections for “Tech Spot,” “myWentworth app for students,” and “Enterprise” systems.

Course Design

After the kickoff call, I considered what I had learned about the training need:

Learners needed to be able to apply the information they learned on the job, not just memorize it

Our modality was limited to a 45-minute ILT, per the department’s request

There was too much information to convey and assess for the scope of the training, or even to expect learners to memorize for use on the job

To meet those needs, I wrote a course design document around a single learning objective: “Students will be able to (SWBAT)... using a reference sheet, accurately and appropriately describe key functions of DTS to prospective students.”

In working toward this objective, learners wouldn’t need to memorize all functions. The reference sheet with key information would mimic on-the-job conditions in which learners might refer to their notes or other materials.

Likewise, describing key functions to prospective student personas aligns with employees’ actual job duties, promoting engagement and retention.

The use of scenarios (with the personas) is also a good fit here, as it allows learners to practice a necessary skill in a lower-stakes environment.

Finally, after some thought, I decided to structure the training as a stations activity, in which learners would circulate around the room to engage with the personas. This fit my goal of making the training more active and would give learners more opportunities to work with the information.

I met with my SME again to review the design document, which he enthusiastically approved.

Image description: The first page of the DTS Admissions Training 2023 Course Design Document. The page displays three sections: a table of contents, learning objectives, and training overview.

Development

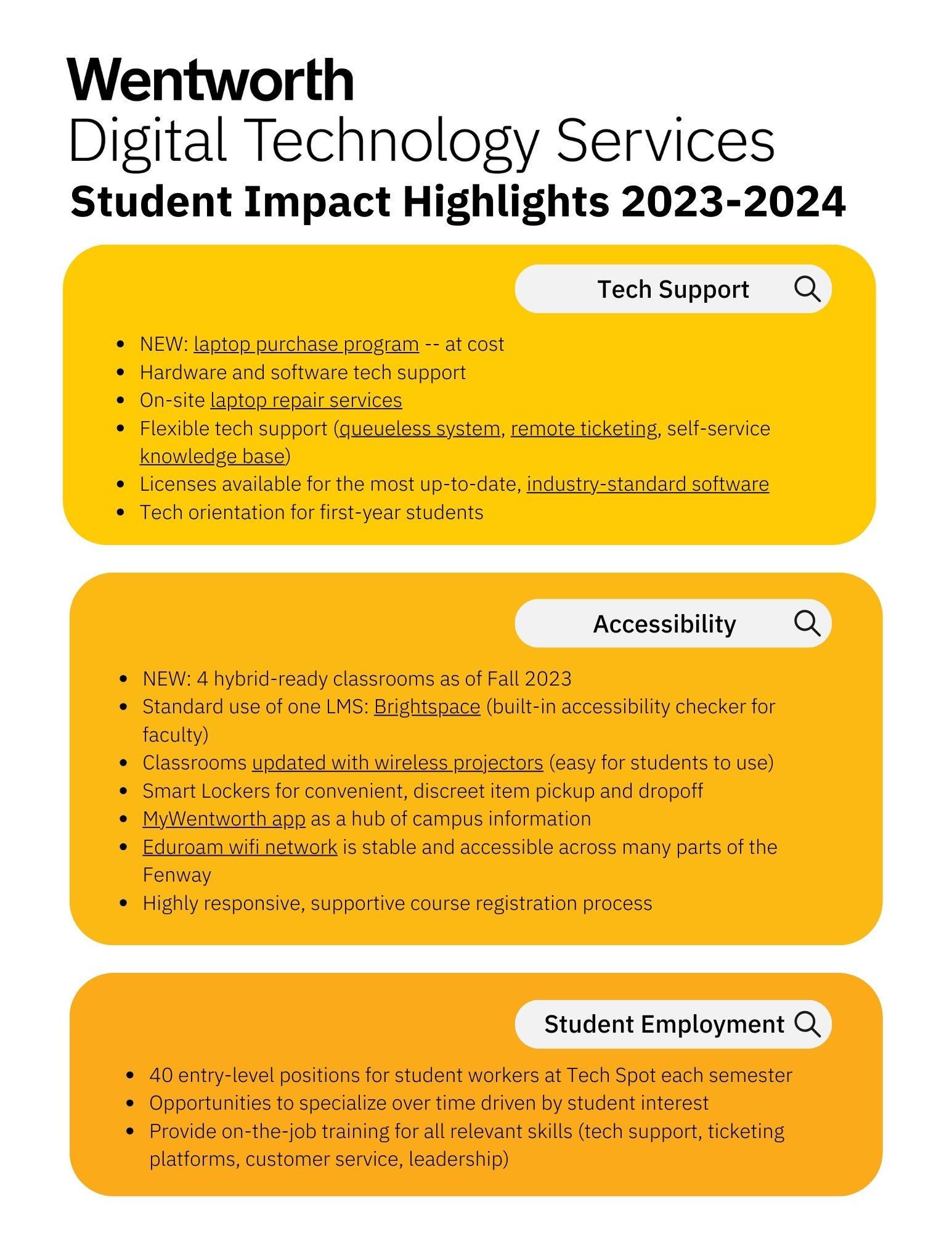

When the time came for development, I reviewed my list of DTS services and identified those that I thought prospective students would be most interested in or most affected by. From there, I curated that information into a one-page infographic, which I designed in Canva.

Image description: An infographic titled “Wentworth Digital Technology Services Student Impact Highlights 2023-2024.” The document is broken up into three sections, each of which appears against a gold or orange text box stacked on top of each other. The sections are “Tech Support,” “Accessibility,” and “Student Employment.” They contain bulleted lists of important information for prospective students.

Next, I wrote several student personas with descriptions targeted to elicit different parts of that information from my learners. For example, for the persona of Anisha below, learners might read the onesheet and want to tell Anisha about accessibility features in university platforms like the learning management system. For Martin, they might share that DTS has a remote tech support ticketing system, so he can request and get technology help even when off campus.

I validated all the personas and their technology needs with my SME.

Image description: Two prospective student personas, one for Anisha (she/her) and one for Martin (he/him). Anisha is a prospective international student who is visually impaired. She is interested in finding out about the school’s accessibility technology and in ensuring that she purchases the right equipment. Martin is a working professional exploring a hybrid graduate program. He’s concerned that as a hybrid student, he may not get the technical support he needs, especially as someone without significant experience with technology.

The preparation for this training was pleasantly old-school, relying on paper copies of the onesheet/notes and the prospective student personas.

To further bring the personas to life for my learners, I downloaded photos from Adobe Stock to accompany each persona, which I printed on a plotter to display next to the written description.

Lastly, I put together a PowerPoint deck to guide our learners through the experience.

Image description: Photo of persona Liv, a dark skinned person with short hair, wearing a white tank top under a leopard print wrap shirt and dark green pants. They’re crossing their arms and smiling confidently while looking at the camera. They’re in front of a bright orange background.

Image description: The agenda slide from the training, dated August 16, 2023. The agenda is 1. Introductions, 2. Stations, 3. Discussion, 4. Q&A, and 5. Followup.

Implementation

Facilitating this training was the ultimate exercise in flexibility, as just about every logistical thing that could go wrong did.

First, our reserved room had been double booked, so we were forced at the last minute to find a different space and communicate the change to our learners. When they arrived, they informed us that although we had planned exclusively for an in-person training (per their request), there would in fact be several employees joining virtually. And finally, as we initiated the Zoom call, we discovered that the Zoom client on the AV equipment required an update that errored out.

Before kicking off the training, I quickly updated our deck to include our virtual attendees, and when the AV equipment failed, I logged on to Zoom from my laptop so that I could facilitate for them at the same time.

In spite of these many hiccups, our learners remained engaged. They carefully read the personas and utilized the onesheet to identify technology resources to meet the personas’ needs. At the end of the training, they thoughtfully shared their findings with the larger group, making insightful recommendations that I hadn’t anticipated.

Image description: PowerPoint slide titled “Stations.” There are two columns containing bulleted directions for learning. The first column reads, “In-Person: Circulate stations around the room individually or small groups (your choice!) to read student personas. Use ‘Student Impact Highlights’ onesheet to pull relevant info for that student. Make notes on ‘Your Recommendations’ sheet. Josh will be available for questions.” The second column reads, “Virtual: Work in a breakout room and have 1 person read each persona aloud. Use ‘Student Impact Highlights’ onesheet to pull relevant info for that student. Make notes anyway (no virtual handout, sorry!). Megan will be available for questions.”

Results & Takeaways

Evaluation Using the Kirkpatrick Model of Training Evaluation

Level 1: Reaction

At Level 1: Reaction of the Kirkpatrick Model, the training was clearly successful:

100% of learners agreed or strongly agreed with the statement “I feel that the training will be essential to my success in informing students about DTS.”

100% of learners strongly agreed that they would recommend the training to their colleagues (net promoter score)

Qualitative feedback included, “I really enjoyed this training, it was very informative.”

Level 2: Learning

At Level 2: Learning, all participants demonstrated that they had acquired the intended skill, based on their engagement and participation. However, the learners were at times working on the scenarios in pairs or groups. For a purer individual assessment, I would need to structure the training differently. For example, I might have them work through one scenario collaboratively, with a partner or group, as an opportunity to practice the skill. Then I could assess them individually on that same skill, perhaps by having them analyze a second scenario that they present to their partner or submit as a more formal assessment. Time constraints made that approach unworkable this time.

Level 3: Behavior

Evaluating Level 3: Behavior would require additional followup and collaboration with the Admissions Office, which I did not conduct last year given my capacity. But to evaluate behavior changes, I certainly could have followed up with the team several weeks later to assess whether they had been successfully using the onesheet to counsel prospective students. The nature of their work (involving significant travel) would make on-the-job observation challenging, so this would likely need to take the form of a self-reported evaluation form.

Level 4: Results

The Results level for this training would be challenging to evaluate, given that this was just one training in a larger slate of cross-departmental trainings provided to this team. It would be interesting to look closely at year-over-year student application rates for the counselors who participated in the training, but even were the comparison favorable, it would be hard to know how much of the change was attributable to this specific learning experience.

Other Reflections on Training Structure and Implementation

Come Prepared with a Plan B for Virtual Attendees

The last-minute notification of remote attendees was tough, as I had in no way designed this experience for a virtual audience. I did my best to include them, but during the discussion portion, they were noticeably less participatory than in-person attendees. Now that I know better how many people choose to work from home on short notice, I would develop a plan B for virtual folks, just in case.

Expert Groups Instead of Stations

During the design stage, I debated whether to have learners engage with the scenarios in expert groups (i.e., assign one scenario to one group, which they would then share out with the larger group) or stations. As mentioned above, I ultimately decided on the stations approach, to leverage the in-person nature of the training and get my learners thinking on their feet. Structuring the scenarios as stations also allowed all learners to engage with all the student personas, and therefore, use more of the information on their reference sheet.

In hindsight, I might consider using the expert group approach instead. It might prompt deeper engagement with tailoring information to each persona and therefore greater retention of those specific details. And in the event of a hybrid audience (as discussed above), it would certainly translate more easily.

Experiment with Scenario Mechanism

As with any training that involves scenarios, there are many ways to ask learners to engage. I opted for written scenarios, but another option would be role play, asking learners to actually perform the skill in the way that they would perform it on the job, by directly counseling a “prospective student” on the university (with the written scenarios, they instead described what information they would share).

Another intriguing option would be to leverage AI technology, like the chatbot Poe. In that case, I would train Poe with the relevant knowledge base in advance, and then learners could interact with Poe in a written chat form—again, closer to the authentic task as it’s performed on the job, but perhaps not as high-pressure as a role play activity with colleagues. This would not necessarily be the best fit for an ILT, although it could be leveraged at the end of the training as a form of assessment, or a few weeks after the training as a quick refresher to promote retention and behavior change over time.

The AI chatbot scenario method is also flexible and highly scalable for delivery to a remote and/or asynchronous audience, as needed.